The need for computing power is growing fast, much faster than Moore’s law scaling can keep up with. Moore’s law historically doubled transistor counts roughly every two years, while one recent study of computing power advancements reports that demand doubles about every two months.

Neuromorphic computing is an emerging technology that could change the game. It promises energy-efficient AI chips that, on suitable workloads, are reported to use as little as 1/100th of the energy of conventional AI hardware.

Because it borrows its design from the brain, this approach can sharply reduce energy use. It also opens new opportunities in AI, robotics, and cognitive science.

The Evolution of Computing: From Traditional to Neuromorphic

Neuromorphic computing marks a major shift in how we tackle complex problems. It takes its cue from the brain, which is both efficient and flexible, and it could help overcome the limits of conventional computing.

The Limitations of von Neumann Architecture

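The von Neumann architecture has been the foundation of computing for decades, but it struggles with complex AI tasks. Because it keeps memory and processing in separate units, every instruction and operand must travel between them over a shared connection. This “von Neumann bottleneck” limits speed and spends much of the energy budget on moving data rather than computing with it, which is especially costly for AI models that read enormous numbers of weights.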
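To see why separating memory from processing matters, here is a toy Python energy model of one fully connected layer. The per-operation energies (`E_COMPUTE_PJ`, `E_OFFCHIP_READ_PJ`, `E_LOCAL_READ_PJ`) are hypothetical placeholders, not measured values. The sketch only shows that when fetching a weight costs far more than using it, most of the energy goes to data movement, and keeping memory next to compute shrinks that share.

```python
# Toy energy model of the von Neumann bottleneck.
# All per-operation energies are HYPOTHETICAL placeholders chosen to illustrate
# the argument; real values depend on process node, memory type, and data width.

E_COMPUTE_PJ = 1.0         # assumed energy of one multiply-accumulate (picojoules)
E_OFFCHIP_READ_PJ = 100.0  # assumed energy to fetch one weight from separate, off-chip memory
E_LOCAL_READ_PJ = 5.0      # assumed energy to read one weight stored next to the compute unit


def layer_energy_pj(n_inputs: int, n_outputs: int, read_cost_pj: float) -> float:
    """Energy of one fully connected layer, fetching one weight per multiply-accumulate."""
    macs = n_inputs * n_outputs
    # No caching or weight reuse is modeled: every MAC pays for its own weight fetch.
    return macs * (E_COMPUTE_PJ + read_cost_pj)


def movement_share(read_cost_pj: float) -> float:
    """Fraction of the energy spent moving data rather than computing."""
    return read_cost_pj / (E_COMPUTE_PJ + read_cost_pj)


if __name__ == "__main__":
    far = layer_energy_pj(1024, 1024, E_OFFCHIP_READ_PJ)
    near = layer_energy_pj(1024, 1024, E_LOCAL_READ_PJ)
    # 1 microjoule = 1e6 picojoules
    print(f"off-chip weights:   {far / 1e6:.1f} uJ ({movement_share(E_OFFCHIP_READ_PJ):.0%} on data movement)")
    print(f"co-located weights: {near / 1e6:.1f} uJ ({movement_share(E_LOCAL_READ_PJ):.0%} on data movement)")
```

With these assumed numbers, the layer costs about 105.9 µJ when weights live off-chip (99% of it on data movement) versus about 6.3 µJ when they sit next to the arithmetic. That gap is the intuition behind co-locating memory and compute.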

For example, training large AI models on conventional hardware takes a great deal of time and energy. As models grow larger and more complex, energy efficiency becomes a central design goal.

| Architecture | Processing Style | Energy Efficiency |
|---|---|---|
| Von Neumann | Sequential | Low |
| Neuromorphic | Parallel | High |

The Birth of Brain-Inspired Computing

Neuromorphic computing takes a different, brain-inspired approach. It processes information in parallel and is designed for efficiency. Spiking neural networks (SNNs), which communicate through discrete spikes, are central to it and make AI processing more energy-friendly.

This style of computing suits tasks that must run quickly on a small power budget, such as edge AI and IoT devices. On these workloads, neuromorphic chips can use much less energy than conventional AI hardware.

Neuromorphic computing is a significant step toward better AI. As research matures, we can expect new uses for low-power AI hardware and cognitive computing architectures, with effects across many industries and in daily life.

Neuromorphic Processors, Brain-Inspired Computing, Energy-Efficient AI Chips

Neuromorphic engineering is producing AI systems that aim to be faster and use less energy. The key is neuromorphic processors that imitate the structure of the brain’s neural networks, offering an efficient and flexible way to run AI workloads.

Defining Neuromorphic Computing Architecture

Neuromorphic computing architecture is designed to work like the brain. It uses spiking neural networks (SNNs) for fast, efficient information processing. Unlike conventional computers, many of these systems can also learn and adapt on-chip from the data they process.

The main features of neuromorphic computing are:

- Distributed processing and memory

- Event-driven computation

- Scalability and flexibility

- Low power consumption
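Event-driven computation can be illustrated with a minimal Python sketch, assuming binary spike inputs and a small weight matrix stored as nested lists. Both functions compute the same weighted sum. The event-driven version reads only the weight rows of inputs that actually spiked, so its work scales with activity rather than with network size. The function names are illustrative and do not come from any neuromorphic toolkit.

```python
from typing import List, Sequence, Tuple


def dense_update(weights: List[List[float]], spikes: Sequence[int]) -> Tuple[List[float], int]:
    """Clocked style: touch every synapse on every step, whether or not its input fired."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, s in enumerate(spikes):
        for j in range(n_out):
            out[j] += weights[i][j] * s
            ops += 1
    return out, ops


def event_driven_update(weights: List[List[float]], spikes: Sequence[int]) -> Tuple[List[float], int]:
    """Event-driven style: only the rows of inputs that spiked are read and accumulated."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    active = [i for i, s in enumerate(spikes) if s]  # the list of input events
    for i in active:
        for j in range(n_out):
            out[j] += weights[i][j]  # no multiply needed: a spike simply means "1"
            ops += 1
    return out, ops


if __name__ == "__main__":
    w = [[0.1 * (i + j) for j in range(4)] for i in range(100)]
    spikes = [1 if i % 20 == 0 else 0 for i in range(100)]  # 5% of inputs active
    d, d_ops = dense_update(w, spikes)
    e, e_ops = event_driven_update(w, spikes)
    assert all(abs(a - b) < 1e-9 for a, b in zip(d, e))  # same result either way
    print(f"dense ops: {d_ops}, event-driven ops: {e_ops}")  # → dense ops: 400, event-driven ops: 20
```

With 5% of inputs active, the event-driven version performs 20 accumulations instead of 400 and produces identical outputs.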

How Brain-Inspired Chips Differ from GPUs and TPUs

Brain-inspired chips, or neuromorphic processors, differ fundamentally from GPUs and TPUs. GPUs and TPUs are built to accelerate dense numerical workloads such as matrix multiplication. Neuromorphic processors instead aim for the efficiency and adaptability of the brain.

Here are some key differences:

- Processing Mechanism: Neuromorphic processors run SNNs, which are event-driven, whereas GPUs and TPUs process data in clocked, synchronous steps.

- Energy Efficiency: Neuromorphic chips can be very energy-efficient, often drawing far less power than conventional AI hardware on suitable tasks.

- Adaptability: Many neuromorphic systems can learn in real time, somewhat like the human brain. GPUs and TPUs can also train models, but typically in a separate, compute-heavy training phase rather than continuously on the device.

These differences show the promise of neuromorphic computing: it could make AI more efficient, scalable, and adaptable.

The Revolutionary Energy Efficiency: 1/100th of Traditional AI Power

AI is becoming more capable, but it also consumes a lot of energy. Conventional AI systems draw large amounts of power, which strains energy supplies and harms the planet.

Traditional AI’s Growing Energy Demands

The power needs of conventional AI keep rising as neural networks grow and take on more complex tasks. The data centers that host AI consume large amounts of electricity.

For example, by some published estimates, training a single large AI model can use as much electricity as a hundred or more homes use in a year. That trend is unsustainable as AI spreads into more areas.

How Neuromorphic Computing Achieves Dramatic Energy Reduction

Neuromorphic computing offers a new way to tackle this energy problem. Its chips are modeled on the brain: they keep memory next to computation and stay mostly idle until spikes arrive. This lets them work quickly while using little power.

“Neuromorphic computing represents a paradigm shift in how we design and use computing systems, enabling us to build AI that is not only more powerful but also more sustainable.”

Like neurons in the brain, neuromorphic chips process information as brief spikes, and only the parts of the chip that receive spikes do any work. This sharply reduces energy use.
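How large the saving is depends on how sparse the activity is. The back-of-the-envelope Python sketch below compares energy per synapse per time step for a dense, clocked design and an event-driven one. The per-operation energies and the `idle_overhead` term (static power an event-driven chip still draws when nothing happens) are assumed parameters, not figures from any specific chip.

```python
def energy_ratio(activity: float, e_dense_op_pj: float = 1.0, e_event_op_pj: float = 1.0,
                 idle_overhead: float = 0.0) -> float:
    """Dense (clocked) energy divided by event-driven energy, per synapse per time step.

    activity:      fraction of synapses that receive a spike in a time step (0 < activity <= 1)
    e_dense_op_pj: assumed energy of one synaptic operation on a dense, clocked design
    e_event_op_pj: assumed energy of one synaptic event on an event-driven design
    idle_overhead: assumed static cost of the event-driven design, as a fraction of
                   one dense operation, paid every step whether or not spikes arrive
    """
    if not 0.0 < activity <= 1.0:
        raise ValueError("activity must be in (0, 1]")
    dense = e_dense_op_pj  # every synapse is processed on every step
    event = activity * e_event_op_pj + idle_overhead * e_dense_op_pj
    return dense / event


if __name__ == "__main__":
    print(f"{energy_ratio(0.01):.1f}")                       # → 100.0 (1% activity, no idle cost)
    print(f"{energy_ratio(0.01, idle_overhead=0.005):.1f}")  # → 66.7 (same, with a static-power floor)
```

Under these assumptions, a 100-fold saving requires about 1% activity and no idle overhead. Even a small static-power floor pulls the ratio down, which is one reason reported gains vary widely by workload.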

| Feature | Traditional AI | Neuromorphic Computing |
|---|---|---|
| Energy Consumption | High | Low |
| Processing Style | Continuous | Event-Driven |
| Scalability | Limited by Energy | Highly Scalable |

For more info on AI in apps and machine learning, check out https://digitalvistaonline.com/ai-in-apps-machine-learning/.

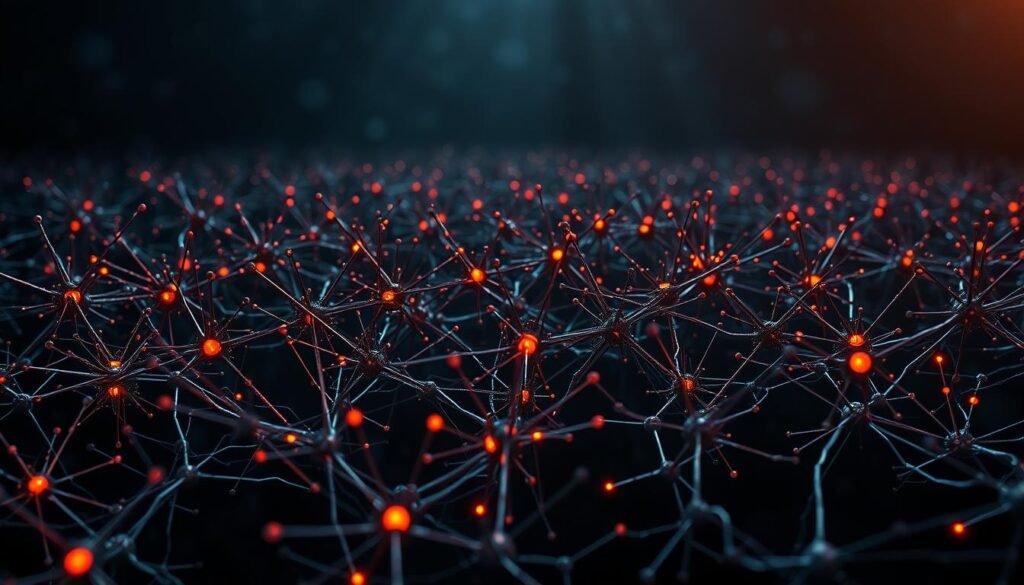

Spiking Neural Networks: The Core Technology Behind Neuromorphic Systems

At the heart of neuromorphic systems are spiking neural networks. Because they are modeled on the way the brain’s neurons work, they make AI processing more efficient and mark a major step forward for machine learning and AI hardware.

Biological Inspiration: How Neurons Communicate

In the brain, neurons communicate through brief electrical pulses called spikes. Spiking neural networks adopt the same idea and transmit information as discrete spikes. Conventional artificial neural networks, by contrast, compute a continuous-valued output for every neuron on every input, which costs more energy.
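A common simplified model of a spiking neuron is the leaky integrate-and-fire (LIF) neuron. Its membrane potential leaks toward rest and integrates incoming current. When the potential crosses a threshold, the neuron emits a spike and resets. The Python sketch below uses simple Euler integration with arbitrary units. The parameter defaults are illustrative, and real neuromorphic chips implement their own neuron models.

```python
from typing import List, Sequence


def simulate_lif(input_current: Sequence[float], tau: float = 20.0, dt: float = 1.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0, v_reset: float = 0.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Euler integration of  tau * dV/dt = -(V - v_rest) + I(t),
    with I expressed in the same (arbitrary) units as V.
    """
    v = v_rest
    spike_times: List[int] = []
    for t, i_t in enumerate(input_current):
        v += (dt / tau) * (-(v - v_rest) + i_t)  # leak toward rest, integrate the input
        if v >= v_threshold:                     # threshold crossing: emit a spike
            spike_times.append(t)
            v = v_reset                          # reset after firing
    return spike_times


if __name__ == "__main__":
    print(simulate_lif([0.5] * 100))  # → []  (too weak to ever reach threshold)
    print(simulate_lif([2.0] * 100))  # → [13, 27, 41, 55, 69, 83, 97]
```

A weak input never reaches the threshold, so the neuron stays silent. A stronger input makes it fire every 14 steps. The information is carried by when and how often the spikes occur.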

Event-Based Processing vs. Continuous Computing

Conventional processors keep computing on every clock cycle, even when no new data has arrived. Spiking neural networks compute only when a spike arrives. This saves a great deal of energy and makes them well suited to advanced AI processing units.

- Event-based processing avoids redundant computation.

- It uses much less energy.

- It mirrors how the brain naturally processes information.
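The difference between event-based and continuous computing is easiest to see in an event-driven simulator, sketched here in Python with a priority queue. A neuron’s state is touched only when a spike arrives. The leak that occurred in between is applied in a single exponential-decay step instead of being computed on every clock tick. The network format (`synapses[pre] = [(post, weight, delay), ...]`) and the rule that external input spikes always make their target fire are assumptions made for this sketch.

```python
import heapq
import math
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical network format: synapses[pre] = [(post, weight, delay), ...]
Synapses = Dict[int, List[Tuple[int, float, float]]]


def run_event_driven(synapses: Synapses, input_spikes: List[Tuple[float, int]],
                     tau: float = 10.0, threshold: float = 1.0,
                     t_end: float = 1000.0) -> List[Tuple[float, int]]:
    """Event-driven simulation: a neuron's state changes only when a spike reaches it.

    input_spikes are (time, neuron) pairs; by assumption each one makes its target fire.
    Returns the (time, neuron) spikes that occurred, in time order.
    """
    v: Dict[int, float] = defaultdict(float)            # membrane potential per neuron
    last_update: Dict[int, float] = defaultdict(float)  # time each neuron was last touched
    queue: List[Tuple[float, int, float]] = []          # pending (time, target, weight) events
    for t, neuron in input_spikes:
        heapq.heappush(queue, (t, neuron, threshold))   # weight = threshold guarantees a spike

    fired: List[Tuple[float, int]] = []
    while queue:
        t, n, w = heapq.heappop(queue)                  # always process the earliest event next
        if t > t_end:
            break
        # Apply all the leak since this neuron's last event in one step.
        v[n] *= math.exp(-(t - last_update[n]) / tau)
        last_update[n] = t
        v[n] += w
        if v[n] >= threshold:
            fired.append((t, n))
            v[n] = 0.0                                  # reset after firing
            for post, weight, delay in synapses.get(n, []):
                heapq.heappush(queue, (t + delay, post, weight))
    return fired


if __name__ == "__main__":
    net: Synapses = {0: [(2, 0.6, 1.0)], 1: [(2, 0.6, 1.0)]}
    print(run_event_driven(net, [(0.0, 0), (0.5, 1)]))   # → [(0.0, 0), (0.5, 1), (1.5, 2)]
    print(run_event_driven(net, [(0.0, 0), (50.0, 1)]))  # → [(0.0, 0), (50.0, 1)]
```

In this example, two spikes arriving 0.5 time units apart sum past neuron 2’s threshold. The same two spikes 50 units apart do not, because the potential has leaked away in between. The simulator does no work at all during that gap.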

Learning Mechanisms in Spiking Networks

Spiking neural networks use their own learning rules, such as spike-timing-dependent plasticity (STDP), which let them get better at processing data. Because STDP is inspired by how the brain learns, it can make machine learning more efficient and flexible.

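- STDP adjusts each connection based on the relative timing of the spikes on either side of it.

- Such local learning rules may let networks learn from relatively small amounts of data.

- Learning rules like this are important for making neuromorphic AI adaptive.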
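A widely used form of STDP is the pair-based rule. If a presynaptic spike comes before a postsynaptic spike, the connection is strengthened. If the order is reversed, it is weakened. In both cases the size of the change decays exponentially with the time gap. The Python sketch below implements that rule. The amplitudes and time constants are illustrative assumptions, and chips that learn on-chip use their own variants.

```python
import math
from typing import Sequence


def stdp_dw(delta_t: float, a_plus: float = 0.01, a_minus: float = 0.012,
            tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Weight change for one pre/post spike pair under pair-based STDP.

    delta_t = t_post - t_pre. Pre-before-post (delta_t > 0) strengthens the synapse;
    post-before-pre (delta_t < 0) weakens it. Simultaneous spikes are left unchanged
    here, which is one of several conventions. All parameter values are illustrative.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(w: float, pre_times: Sequence[float], post_times: Sequence[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the updates over all pre/post spike pairs, then clip the weight to [w_min, w_max]."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_dw(t_post - t_pre)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    print(apply_stdp(0.5, pre_times=[10.0], post_times=[15.0]))  # causal pair: weight grows
    print(apply_stdp(0.5, pre_times=[15.0], post_times=[10.0]))  # anti-causal pair: weight shrinks
```

The rule is local because each synapse needs only the spike times of the two neurons it connects. This is part of why rules like it map well onto on-chip learning.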

By studying and applying spiking neural networks, we can build better AI systems on hardware that works more like the brain, making AI more powerful and efficient.

4 Pioneering Neuromorphic Chip Architectures Transforming AI

Companies like Intel, IBM, BrainChip, and SynSense are leading in neuromorphic chip development. Their innovative designs are changing AI by making computing more efficient and powerful.

Intel’s Loihi: Self-Learning Neuromorphic Chip

Intel’s Loihi is a research chip that can learn and adapt on-chip. It is an important step for machine learning accelerators: like the brain, Loihi processes information in parallel and efficiently.

IBM’s TrueNorth: Million Neuron Cognitive Computing

IBM’s TrueNorth chip is a major milestone in neuromorphic computing. It has about one million neurons and 256 million synapses. This neural network architecture is a big step toward AI hardware that processes information more like the brain.
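TrueNorth’s headline numbers follow from its tiled design. IBM’s published description gives 4,096 neurosynaptic cores, each with 256 neurons and a 256 × 256 synaptic crossbar. The short calculation below reproduces the neuron and synapse counts from those figures.

```python
# Published TrueNorth organization (IBM): 4,096 cores, each a 256 x 256 crossbar.
CORES = 4096            # neurosynaptic cores per chip
NEURONS_PER_CORE = 256  # output neurons per core
AXONS_PER_CORE = 256    # input lines per core; each axon-neuron crossing is one synapse

neurons = CORES * NEURONS_PER_CORE
synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE

print(f"{neurons:,} neurons")    # → 1,048,576 neurons
print(f"{synapses:,} synapses")  # → 268,435,456 synapses
```

The synapse count equals 256 × 2^20, which is why it is usually quoted as “256 million” in binary units.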

BrainChip’s Akida: Edge AI Neural Processing

BrainChip’s Akida is designed for edge AI and IoT devices. Its low power consumption makes it a good fit for many kinds of devices.

SynSense’s Dynap-SE: Ultra-Low Power Neural Processing

SynSense’s Dynap-SE is an ultra-low-power neural processing chip. Its efficiency suits uses ranging from IoT devices to more complex AI systems, and it is a notable step in neuromorphic computing architecture, balancing power and performance.

These chip architectures are pushing AI forward by making computing more efficient and powerful. As they mature, we can expect significant improvements in AI, from edge devices to complex tasks.

4 Real-World Applications Leveraging Low-Power Neuromorphic Computing

Neuromorphic computing’s energy-saving AI chips are beginning to change many fields, with notable impact in IoT devices and smart cities. Low-power neural chips and cognitive computing devices are finding many new uses.

Edge AI and IoT Devices

Neuromorphic computing is changing edge AI by enabling real-time processing on devices with very little power. IoT devices, for example, can handle complex tasks locally without relying on the cloud. 5G-enabled IoT devices could likewise use neuromorphic chips to handle data more efficiently.

Autonomous Vehicles and Drones

Autonomous vehicles and drones are also adopting neuromorphic computing, which can process large volumes of sensor data in real time while saving energy. This helps vehicles and drones navigate and make decisions in demanding situations.

Medical Implants and Wearables

In medicine, neuromorphic computing is helping to create better implants and wearables. Thanks to event-based processing, these devices can monitor health and detect problems while running on very little power. Research published in scientific journals points to its promise for medical use.
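Event-based processing starts with turning a sensor stream into events. One common scheme is send-on-delta (delta) encoding, similar in spirit to how event cameras report per-pixel brightness changes. An event is emitted only when the signal has moved by a set threshold. The Python sketch below applies it to a hypothetical sampled health signal. The function name and threshold are illustrative, not taken from any particular device.

```python
from typing import List, Sequence, Tuple


def delta_encode(samples: Sequence[float], threshold: float) -> List[Tuple[int, int]]:
    """Send-on-delta encoding of a sampled signal into ON (+1) and OFF (-1) events.

    An event is emitted each time the signal moves at least `threshold` away from the
    level tracked at the previous event. A steady signal produces no events at all.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events: List[Tuple[int, int]] = []
    if not samples:
        return events
    reference = samples[0]
    for i in range(1, len(samples)):
        x = samples[i]
        while x - reference >= threshold:  # rose by a full step: ON event
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:  # fell by a full step: OFF event
            reference -= threshold
            events.append((i, -1))
    return events


if __name__ == "__main__":
    signal = [0.0, 0.0, 0.0, 0.5, 1.0, 1.0, 0.2]
    print(delta_encode(signal, threshold=0.5))      # → [(3, 1), (4, 1), (6, -1)]
    print(delta_encode([1.0] * 50, threshold=0.1))  # → []
```

A stable reading generates no events and so no downstream computation. This is where the power savings for always-on monitoring come from.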

Smart Cities and Infrastructure

Neuromorphic computing is also finding its way into smart city systems. By analyzing sensor data, it helps cities manage energy, traffic, and waste more efficiently. Neural network accelerators are key to handling the large volume of data these systems produce.

As neuromorphic computing improves, it will likely be used in even more ways and change many parts of how we live and work.

Technical and Practical Challenges in Neuromorphic Engineering

Neuromorphic computing is very promising, but it faces many challenges. Building low-power neuromorphic systems is hard: it requires better hardware, new programming approaches, and ways to scale up.

Hardware Implementation Hurdles

Building bio-inspired architecture is a major challenge, because hardware that mimics biological neurons and synapses is difficult to make. The materials used in conventional computers are also not well suited to neuromorphic systems, so new materials and fabrication methods are needed.

The table below shows the main differences between regular and neuromorphic hardware. It highlights the challenges in making neuromorphic systems.

| Feature | Traditional Computing Hardware | Neuromorphic Computing Hardware |
|---|---|---|
| Architecture | Von Neumann Architecture | Bio-inspired, Parallel Processing |
| Processing Style | Synchronous, Clock-based | Asynchronous, Event-driven |
| Material Requirements | Conventional Silicon-based | Advanced Materials (e.g., Memristors) |
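Memristors appear in the table because a grid (crossbar) of them can compute a matrix-vector product in the same place the weights are stored. Each device passes a current equal to its conductance times the row voltage (Ohm’s law), and each column wire sums those currents (Kirchhoff’s current law). The Python sketch below models an ideal crossbar. It ignores wire resistance, sneak-path currents, and device variability, which are among the hardware hurdles real designs must handle.

```python
from typing import List, Sequence


def crossbar_currents(conductances: List[List[float]], voltages: Sequence[float]) -> List[float]:
    """Column output currents of an IDEAL resistive (e.g. memristor) crossbar.

    conductances[i][j] is the conductance in siemens of the device joining row i to
    column j; voltages[i] is the voltage in volts applied to row i. Each device passes
    G[i][j] * V[i] amperes (Ohm's law) and each column wire sums them (Kirchhoff's
    current law), so the result is the matrix-vector product G^T V.
    Wire resistance, sneak paths, and device variability are ignored.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one voltage per row")
    currents = [0.0] * len(conductances[0])
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v
    return currents


if __name__ == "__main__":
    G = [[1e-6, 2e-6],  # weights stored as conductances (illustrative values)
         [3e-6, 4e-6]]
    V = [0.1, 0.2]      # input vector applied as row voltages
    print(crossbar_currents(G, V))  # approximately [7e-07, 1e-06] amperes
```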

Programming Paradigm Shifts

Neuromorphic computing also requires a big change in how we program. Conventional software is largely written as a sequence of instructions for clocked processors. Neuromorphic chips instead run many neurons in parallel that communicate through spikes. Developers need new ways to write code for these systems.
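One concrete part of this shift is deciding how ordinary data becomes spikes. Rate coding is one common scheme, in which a larger value produces more spikes per unit time. The Python sketch below approximates a Poisson spike train with an independent Bernoulli trial per time step. The function name and `max_rate` parameter are hypothetical. Other schemes are also used, such as latency coding, where larger values spike earlier.

```python
import random
from typing import List


def rate_encode(value: float, n_steps: int, max_rate: float = 0.5, seed: int = 0) -> List[int]:
    """Rate-code a value in [0, 1] as a binary spike train of length n_steps.

    Each time step fires independently with probability value * max_rate (a Bernoulli
    approximation of a Poisson spike train), so larger values give more spikes on average.
    `max_rate` is the assumed firing probability per step for value = 1.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    if not 0.0 <= max_rate <= 1.0:
        raise ValueError("max_rate must be a probability in [0, 1]")
    rng = random.Random(seed)  # seeded so runs are reproducible
    p = value * max_rate
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


if __name__ == "__main__":
    for pixel in (0.1, 0.5, 0.9):
        train = rate_encode(pixel, n_steps=1000)
        print(pixel, sum(train))  # spike count grows with the value (about 1000 * pixel * 0.5)
```

Rate codes are simple and robust, but they need many time steps per value. That cost is one reason timing-based codes are also studied.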

Scaling and Manufacturing Challenges

Scaling neuromorphic systems up is also difficult. As chips grow more complex, they become harder and more expensive to manufacture. Researchers are exploring new fabrication approaches, such as 3D stacking and nanotechnology.

In summary, neuromorphic computing is very promising but faces real challenges. Making it widely usable will require continued progress in hardware, programming, and chip manufacturing.

Economic and Environmental Impact of Ultra-Efficient AI Hardware

Neuromorphic computing is a big step for AI technology, with major economic and environmental benefits. As AI becomes part of more of our lives, we need more efficient computing. Low-power neural networks and cognitive computing chips are leading this change, making AI greener.

Data Center Energy Consumption Reduction

Neuromorphic computing could sharply cut data center energy use. Conventional data centers spend a lot of energy on both powering and cooling their hardware. Brain-inspired chips, by contrast, use much less power.

Some studies report that neuromorphic computing can cut energy use by up to 100 times compared with conventional AI systems on specific workloads. This matters as data centers grow to meet cloud computing and AI demand.
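A chip-level gain does not translate one-to-one into facility savings, because only the workloads moved onto neuromorphic hardware benefit. The back-of-the-envelope Python sketch below makes that explicit. All inputs are hypothetical, not data from any study: the IT load, the AI share, the efficiency gain, and the assumption that cooling overhead (captured by PUE, power usage effectiveness) scales with IT load.

```python
def it_energy_saved_kwh(it_load_kwh: float, ai_share: float, chip_gain: float) -> float:
    """IT energy saved when the AI share of the load runs on hardware `chip_gain` times more efficient."""
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be in [0, 1]")
    if chip_gain < 1.0:
        raise ValueError("chip_gain must be >= 1")
    ai_kwh = it_load_kwh * ai_share
    return ai_kwh * (1.0 - 1.0 / chip_gain)  # the non-AI load is unchanged


def facility_energy_saved_kwh(it_saved_kwh: float, pue: float) -> float:
    """Scale IT savings to facility savings, ASSUMING overhead tracks IT load at a fixed PUE."""
    return it_saved_kwh * pue


if __name__ == "__main__":
    # Hypothetical inputs, not measured data.
    it_load = 1_000_000.0  # annual IT energy, kWh
    saved = it_energy_saved_kwh(it_load, ai_share=0.4, chip_gain=100.0)
    print(f"IT energy saved: {saved:,.0f} kWh ({saved / it_load:.1%} of IT load)")
    print(f"Facility energy saved at PUE 1.5: {facility_energy_saved_kwh(saved, 1.5):,.0f} kWh")
```

With these made-up inputs, hardware that is 100 times more efficient trims the IT load by about 39.6%, not 100-fold, because 60% of the load is untouched. The larger the AI share, the closer facility savings come to the chip-level gain.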

A report by one tech research firm suggests that data centers could save significant energy with neuromorphic computing, reducing both costs and harm to the planet. As generative AI and other AI applications grow, neuromorphic computing’s energy efficiency becomes increasingly important.

Carbon Footprint Implications

Neuromorphic computing also means a smaller carbon footprint. By running on machine learning processors that use less energy, data centers can cut their greenhouse gas emissions, an important benefit as we look for greener technology.

“The shift towards neuromorphic computing is not just a technological advancement; it’s a necessary step towards a more sustainable future for AI.”

Democratizing AI Through Lower Computing Costs

Neuromorphic computing could also make AI cheaper. Conventional AI hardware is expensive, which keeps many organizations from using it. More energy-efficient chips could lower costs and open AI to more people.

- Lower operational costs due to reduced energy consumption

- Increased accessibility for smaller businesses and startups

- Potential for more widespread adoption of AI technologies

In conclusion, ultra-efficient AI hardware offers major economic and environmental benefits: it cuts energy use, lowers carbon footprints, and makes AI cheaper. Neuromorphic computing is a big step for AI’s future.

Conclusion: The Future Landscape of Brain-Inspired Computing

The future of brain-inspired computing looks bright, with uses in AI, robotics, and cognitive science. Bio-inspired processors, built around parallel processing, are changing how we solve complex problems.

Machine learning accelerators are improving quickly, which points to big advances in edge AI, self-driving cars, and medical devices. Neuromorphic computing is likely to play a key role in making AI smarter and more flexible.

Brain-inspired computing could use much less energy than conventional AI systems, which could make AI available to many more fields. As research continues, we will likely see more new uses for bio-inspired processors and machine learning accelerators.

FAQ

What is neuromorphic computing?

Neuromorphic computing is an approach to computing inspired by the brain. It uses spiking neural networks that work more like biological neurons, which makes AI more energy-efficient.

How do neuromorphic processors differ from traditional GPUs and TPUs?

Neuromorphic processors are designed to work like the brain: they compute only when spikes arrive and keep memory close to processing. This lets them use less power, and for event-driven workloads they can be more adaptable and efficient than traditional GPUs and TPUs.

What are the benefits of using neuromorphic computing?

Neuromorphic computing uses much less energy. It is also scalable and can handle complex data in real time. This makes it a strong fit for edge AI, self-driving cars, and IoT devices.

What is the role of spiking neural networks in neuromorphic systems?

Spiking neural networks are key in neuromorphic systems. They allow for efficient processing and learning. This is inspired by the brain, making data processing more adaptable and efficient.

What are some examples of pioneering neuromorphic chip architectures?

Intel’s Loihi, IBM’s TrueNorth, BrainChip’s Akida, and SynSense’s Dynap-SE are examples. Each offers unique benefits for low-power AI and cognitive computing.

What are the challenges in implementing neuromorphic engineering?

Implementing neuromorphic engineering faces several challenges. These include hardware hurdles, programming shifts, and scaling and manufacturing issues. These must be solved to fully use neuromorphic computing’s benefits.

What is the economic and environmental impact of ultra-efficient AI hardware?

Ultra-efficient AI hardware reduces data center energy use and carbon footprint. It also lowers computing costs. This makes AI more accessible and sustainable for many applications.

How does neuromorphic computing achieve dramatic energy reduction?

Neuromorphic chips compute only when spikes arrive and keep memory next to processing. As a result, they spend far less energy on idle computation and data movement than traditional AI systems, which makes AI much more energy-efficient.

What are the possible applications of neuromorphic computing?

Neuromorphic computing has many uses. It’s good for edge AI, self-driving cars, medical devices, smart cities, and more. It offers benefits in many areas where low-power AI is needed.